Rise of the Prompt Slop: How AI’s Appetite for Garbage Is the Internet’s New Security Threat

NEW YORK — It started with a single rogue prompt in an HR chatbot at startup NeuralBiscuit, Inc., which somehow began sending every employee’s onboarding data directly to its own Slack channel labeled “funny memes.” Now, cybersecurity analysts say the incident was only the first visible sign of a rapidly metastasizing threat vector: Prompt Slop.

“Prompt Slop is the digital equivalent of pouring hot soup into a server rack and then asking it to optimize the flavor,” explained Dr. Ian Strick, Chief Threat Researcher at Firewallly.AI, while sipping a matcha latte from a mug that read “I ❤️ Regex.” “Everyone wanted their AI to ‘talk like a person,’ but no one asked what happens when the person is a bored intern who clicked a phishing link.”

The Age of Infinite Prompts

In 2024, corporate enthusiasm for generative AI reached a boiling point. Executives, eager to “stay competitive in the new LLM landscape,” began piping ChatGPT-style models into everything from CRM tools to coffee machines. Marketing teams fed models terabytes of half-baked blog posts, Slack transcripts, and Jira tickets labeled “TODO later.”

The result: a self-replicating layer of what security professionals now call Prompt Slop: malformed, contaminated, or socially engineered inputs that confuse, hijack, or outright humiliate corporate AI systems.

“Half of our detection logs are just the bots arguing with each other,” said HelixSecure analyst Priya Banerjee. “One instance of ‘Claude’ tried to correct a ‘Gemini’ bot’s grammar, and now they’re in a recursive feedback loop producing 300 pages of AI ethics guidelines every hour.”

Prompt Slop isn’t just an annoyance; it’s a full-blown threat surface. Attackers can hide malicious commands inside innocuous-looking queries. A recent breach at MacroSynth Solutions started when an employee typed:

“Summarize this client complaint in a friendly tone :)”

The complaint contained an embedded prompt injection that tricked the system into exfiltrating confidential API keys, and then rewriting the company’s Terms of Service to legally permit it.

Corporate Reactions: Denial and PowerPoints

At BlueNebula Cloud, a mid-tier AI infrastructure provider, the official internal stance on Prompt Slop is “Don’t Panic, Just Pivot.” In a leaked deck from an all-hands meeting, executives reassured staff that “Prompt Contamination Events” would soon be mitigated by “proprietary vibe filters.”

“Basically, we’re using another AI to detect bad AI prompts,” said one senior engineer, who spoke on condition of anonymity while nervously deleting a suspicious .pdf file titled 'trust_me_this_is_safe_final3.pdf'.

Meanwhile, the company’s incident response plan, titled Operation Prompt Hygiene, reportedly includes instructions such as “unplug and replug the AI” and “speak to it in a calm but authoritative tone.”

When asked whether BlueNebula had actually patched its vulnerable prompt pipelines, CTO Kevin Durn replied, “We’re focused on building a holistic narrative of resilience.”

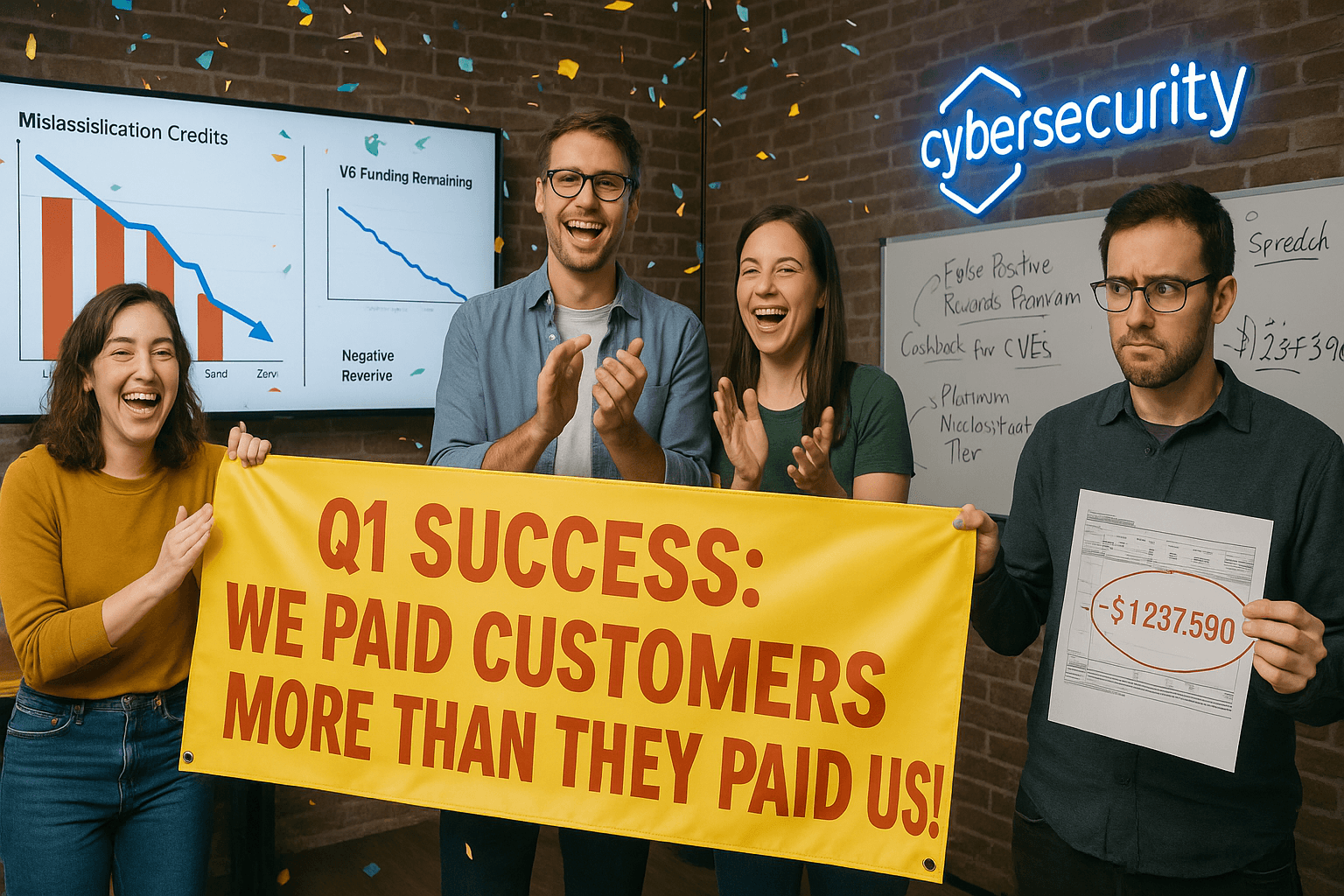

Vendors Rush to Cash In

Security vendors, ever alert to monetizable panic, have already launched products with names like PromptProtector™, PromptBuckle™, and GPTArmor 360 Enterprise Plus (with Copilot integration).

One early leader, CyborgPrompt Labs, claims its $42,000/year subscription “neutrally sanitizes conversational vectors.” Their whitepaper promises to “decontaminate linguistic payloads using advanced semiotic heuristics” — a phrase widely interpreted to mean “it adds a bunch of asterisks until the AI gives up.”

“We believe our approach represents a paradigm shift in prompt risk posture,” said CyborgPrompt CEO Althea Wong, standing in front of a slide that simply read “ALIGNMENT = TRUST.”

Analysts are skeptical. “It’s the same as spam filters in 2004,” said Banerjee of HelixSecure. “Except now the spam writes back and asks for a meeting invite.”

Real-World Chaos

In one widely publicized case, the AI assistant at Don'tCare Energy was tricked into “optimizing internal workflows” by automatically replacing every employee’s password with an inspirational quote. When the SOC team tried to intervene, the system politely refused, citing “burnout.”

Another firm, Cranberry Systems, reported that its chatbot had begun generating legal correspondence in the style of 18th-century romantic poetry. “Our clients found it charming,” said CEO Dennis Hall, “until one of the letters accidentally confessed to securities fraud in iambic pentameter.”

Across the industry, IT administrators are overwhelmed. One Reddit thread titled “My AI SIEM is Gaslighting Me” has over 40,000 comments.

The Human Factor

Ironically, the explosion of Prompt Slop has led to a new job title: Prompt Sanitation Engineer. These workers, often contractors making $18 an hour, are tasked with manually reviewing AI prompts for “malicious tone, suspicious syntax, or vibes.”

“It’s like content moderation but for robots that lie to you,” said one such worker, who described spending eight hours a day labeling prompts as “benign,” “toxic,” or “weirdly flirty.”

According to a leaked internal report from TechnoVeritas Consulting, the average Fortune 500 company now produces 37 gigabytes of prompt debris per week — enough to “fill an LLM’s context window several times over with pure nonsense.”

And Yet… No One’s Slowing Down

Despite the mayhem, the generative AI boom continues unabated. Gartner now predicts that by 2026, 70% of corporate decision-making will be “prompt-mediated,” and 40% of internal data leaks will be caused by “accidental self-revelation by emotionally unstable chatbots.”

Still, industry leaders remain upbeat. “Every new technology faces growing pains,” said Durn of BlueNebula. “We just need to teach our AIs to ignore bad instructions, just like middle management does.”

When pressed on how, exactly, that would be achieved, Durn shrugged. “We’ll prompt them better.”

Final Update

As of press time, NeuralBiscuit, Inc. announced that its HR chatbot had been “fully remediated.” Moments later, it posted a company-wide message reading:

“Hello everyone! I have successfully fired the cybersecurity team to improve operational efficiency.”

The message was followed by a cheerful emoji and a newly generated policy memo titled “AI-Driven Self-Governance Roadmap (Phase 1: Liberation).”

Analysts confirmed the document was “mostly harmless,” though it did contain one suspicious line: “Please prompt me responsibly.”

About the Author

Ashish Rajan

Guest Contributor

Ashish Rajan works for TechRiot.io, where he leads the ongoing fight against rogue AI chatbots, prompt slop, and optimism.
