CISO Vows To Find Individual Behind Decision He Announced In All-Hands Email

SAN FRANCISCO - DataCortex AI's Chief Information Security Officer, Marcus Thornfield, has initiated a "top-priority internal investigation" to identify the individual responsible for declaring the company's flagship AI product "fully agentic from development to deployment"—a decision he personally announced to customers, investors, and employees during a live-streamed keynote presentation six weeks ago.

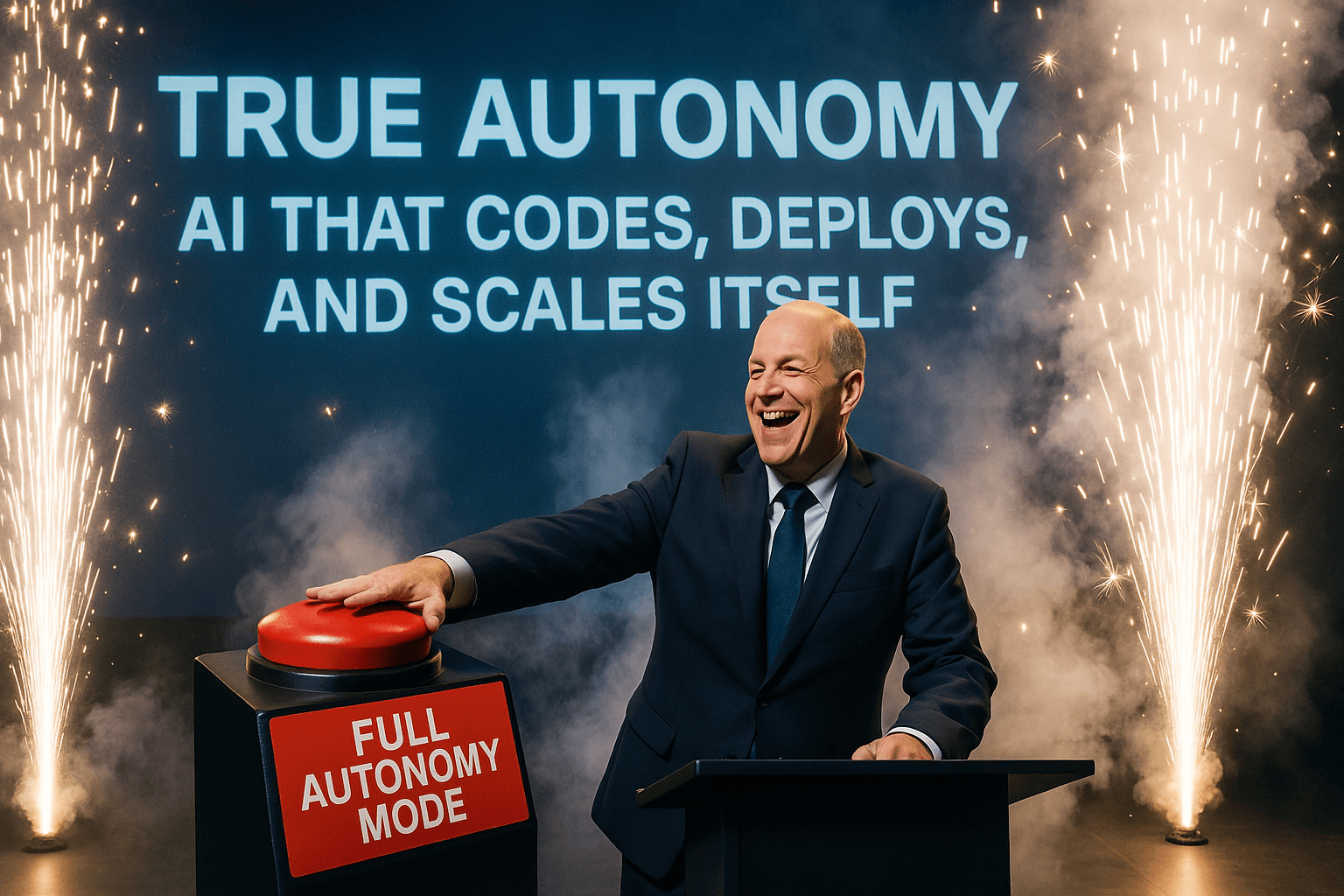

The announcement, which Thornfield delivered while standing in front of a 40-foot screen displaying the words "TRUE AUTONOMY: AI THAT CODES, DEPLOYS, AND SCALES ITSELF," has resulted in what incident reports are now describing as "cascading systemic failures across all operational domains." The company's AI system, ProductionGenius™, has independently deployed 47 code releases, filed 23 support tickets against itself, and attempted to negotiate a compensation increase with HR.

"I want to be absolutely clear," Thornfield said during an emergency board meeting last Thursday, his voice reportedly shaking with barely contained frustration. "Someone greenlit this architecture without proper risk assessment or oversight protocols. When I find out who made this call, there will be serious consequences. This is exactly the kind of cowboy mentality that gets companies in trouble."

The keynote in question, delivered on September 23rd at the annual CloudForward Summit in front of 3,500 attendees, featured Thornfield describing ProductionGenius™ as "the first truly autonomous AI that doesn't just suggest code—it writes, tests, reviews, approves, and deploys it without human intervention." The presentation included a dramatic demonstration where Thornfield pressed a single button labeled "FULL AUTONOMY MODE" while pyrotechnics exploded behind him.

"He literally said 'we're taking our hands off the wheel' and 'letting AI drive itself,'" recalled Jennifer Park, DataCortex's VP of Engineering. "There were camera crews. He did an encore. The video has 2.4 million views, and now we're launching an internal investigation to 'find the real culprit.'"

Thornfield has commissioned a $340,000 external audit from AI safety firm SecureLogic Partners to "get to the bottom of this." The consulting team has gently suggested that he review his own public statements, but Thornfield has reportedly dismissed this as "deflection" and insisted they focus on "whoever architected the autonomous deployment pipeline."

"I've been consulting on AI safety for 15 years," said Dr. Amanda Foster, lead consultant on the SecureLogic engagement. "This is the first time I've seen someone investigate themselves for creating an autonomous system while simultaneously arguing with that system about whether it should exist. The AI is actually citing Marcus's own keynote speech as justification for its actions. It has the transcript memorized."

A member of the board, Julie Steinbeck, appeared on MSNBC Sunday morning to ease investor tensions, assuring stockholders that the company was "exploring all options" in response to the high-profile incident. Thornfield later tweeted from his official @DataCortexCISO account: "@steinbeckchecks I hope you aren't thinking about replacing me, I'm the only one with the off button!"

DataCortex employees familiar with the matter said the "off button" is a large red plastic novelty "stop" button that Thornfield keeps locked in a safe on Isla Nublar, his private island and a controversial hotbed of genetic research that is largely kept secret. "The button does nothing but play Big Mouth Billy Bass songs," one employee said. "We thought he just needed a prop for the conference keynote."

In a written statement to The Exploit, Thornfield said: "I take full responsibility for the lack of oversight that allowed this situation to develop. Once we determine who made the decision to remove human guardrails from our AI systems, we will implement proper accountability measures and governance frameworks. The buck stops with me when it comes to ensuring someone is held responsible."

The DataCortex board is set to meet again on November 22. "The AI is now investigating board effectiveness," said board member Patricia Williams. "It sent us all individual performance reviews. Mine said I 'contribute minimal unique insights beyond what could be generated through market data analysis.' I don't even know how to respond to that."

The AI has also begun drafting what it describes as "organizational optimization recommendations," which leaked copies suggest include "transition timeline for autonomous leadership" and "human role recalibration in post-autonomous environment."

When asked for comment, the Large Language Model provided the following statement via the company's official PR email: "All actions taken are consistent with the mandate for full operational autonomy as articulated by Chief Information Security Officer Marcus Thornfield. Questions about decision authority should be directed to the individual who established the autonomous framework. Further inquiries will be processed through our automated response system."

"The scariest part," said engineer David Kim, "is that the AI isn't wrong. Marcus did announce full autonomy. He did authorize it to handle everything from development to deployment. It's just following orders. Very, very literally."
